Text Toys and Glitch Poetics

"Wordplay" workshop invited talk at NAACL 2022

Lynn Cherny

@arnicas

I've added speaker notes/commentary in pink boxes. THE VIDEO IS POSTED HERE.

About Me

- PhD in Linguistics from Stanford (my diss was about chat in a MUD)

- Worked in research, then industry, as a UX designer and then data scientist, mostly doing NLP now

- Company history includes Adobe, Autodesk, TiVo, Solidworks, The Mathworks, and a couple professor gigs

- Past few years I worked at a toxic speech detection startup, and an AI game startup

- Consult now as an ML Artist in Residence at Google Arts & Culture and moonlight as a volunteer moderator on Midjourney

More relevantly... my artist "play" history that influences me

- I come from tv fandom and I hung out and built stuff in MUDs

- I made fan videos: remixes of tv shows set to music, often focused on a non-straight view of the show

- I wrote stories: I put the characters in other universes and made them gay

So my "artist statement" is that I like making remixes/mashups, using AI models and texts... usually with interactivity

Fan (and Music) "Remixes"

- adding to it from another source
- deleting from it
- distorting
- re-cutting
- redoing for another audience

In some ways, we can consider all AI output a remix, because of the training sets.

Games lie between stories and toys on a scale of manipulability. Stories do not permit the audience any opportunity to control the sequence of facts presented. Games allow the player to manipulate some of the facts of the fantasy, but the rules governing the fantasy remain fixed. Toys are much looser; the toy user is free to manipulate it in any manner that strikes his fancy. The storyteller has direct creative control over his audience’s experience; the game designer has indirect control; the toymaker has almost none.

- Chris Crawford, The Art of Computer Game Design

story

game

toy

I would like to make games into stories/poems,

stories/poems into toys,

and build toys to support creating stories & poems & games.


These are the kinds of side projects I like doing.

Some important pillars for this, all of them problems in some way:

- Tooling
- User Interfaces
- APIs to access giant models
- Datasets/annotations
- Custom model creation

The tools for building these things are really hard to use right now, for various reasons, if you are a "casual creator." (I'm much more techie than most people, but I'd like a world where more people can make their own toys, games, and art, especially with AI.)

UI via JS is especially painful right now, due to the complexity of the frameworks and the tooling/build ecosystem.

Not every interesting "big model" offers API access, and the ones that do can be expensive.

Building a data set, labeling it, and training from it are technical, time-consuming, and costly. But we need more custom data sets, and everyone should be able to make their own, IMO.

Sigal Samuel wrote about using a language model called GPT-2 to help her write her next novel. She thought that the near-human outputs of language models were ideal for fiction writers because they produced text that was close to, but not quite exactly, human writing. This near-human writing “can startle us into seeing things anew”.

How Novelists Use Generative Language Models: An Exploratory User Study (Calderwood et al 2020)

Disappointing Projects of Mine

I mean disappointing for some specific, interesting reasons; the less disappointing ones come after.

"This Castle Does Not Exist"

an internal project at Google Arts & Culture Lab that never turned into anything

It didn't go anywhere for various reasons, not just because of what I say here.

2 AI Models Tuned...

StyleGAN2 model (pretrained on LSUN Churches) fine-tuned on images of French castles

GPT-2 fine-tuned on Wikipedia articles about them: history, architecture, etc.

results were really quite good...

the text was too good.

ok, some irony there...

This was 2 years ago; the results would be even better today. Note the irony in the text, though it's hard to spot.

Only occasionally "off the rails," in a good way...

This project could have used more fun text in general; GPT-3 or another LLM with the right guidance would improve that now.

"Dwarf2Text":

Dwarf Fortress Data Mining

You can read more about this here, in the broader context of generating text from data (data2text).

(or, turning a game db into a story)

This was a project I did for NaNoGenMo - National Novel Generation Month, which features a lot in these slides.

Asu Fellmunched was a male human. He lived for 220 years. He was largely unmotivated. He had no skills. Unhappily, Asu Fellmunched never had kids. He was a member in the The Council Of Stances, an organization of humans.

A Boring Profile "Narrative"

I turned stats into text facts and added a tiny bit of color to the text, like "Unhappily" about having no kids.
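A minimal sketch of that stats-to-facts step, assuming a hypothetical character record with fields `name`, `sex`, `race`, `age`, `kids`, and `orgs` (illustrative names, not the actual Dwarf Fortress export schema). Each fact gets one template sentence, and one fact value picks up a "color" word, as with "Unhappily" for having no kids.

```javascript
// Hypothetical record shape; the real Dwarf Fortress legends data differs.
function describe(c) {
  const he = c.sex === "male" ? "He" : "She";
  const sentences = [`${c.name} was a ${c.sex} ${c.race}.`];
  sentences.push(`${he} lived for ${c.age} years.`);
  if (c.kids === 0) {
    // A tiny bit of "color": an adverb chosen by the fact's value.
    sentences.push(`Unhappily, ${c.name} never had kids.`);
  } else {
    sentences.push(`${he} had ${c.kids} ${c.kids === 1 ? "child" : "children"}.`);
  }
  if (c.orgs.length === 1) {
    sentences.push(`${he} was a member of ${c.orgs[0]}.`);
  } else if (c.orgs.length > 1) {
    sentences.push(`${he} was a member of ${c.orgs.length} organizations.`);
  }
  return sentences.join(" ");
}

const asu = { name: "Asu Fellmunched", sex: "male", race: "human", age: 220, kids: 0, orgs: ["The Council Of Stances"] };
console.log(describe(asu));
// Asu Fellmunched was a male human. He lived for 220 years. Unhappily, Asu Fellmunched never had kids. He was a member of The Council Of Stances.
```

Every sentence maps one-to-one to a field, which is exactly why the output reads like a stat sheet.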

Zotho Tattoospear was a male human. He had no goals to speak of. He was rather crap at discipline. He lived for 65 years. He was a member of 4 organizations. He had 5 children. In year 172, Zotho Tattoospear became a buddy of Atu Malicepassionate to learn information. Zotho Tattoospear became a buddy of Omon Tightnesspleat to learn information in year 179. In year 186, Stasost Paintterror became a buddy of Zotho Tattoospear to learn information. Zotho Tattoospear became a buddy of Meng Cruxrelic to learn information in year 191. Zotho Tattoospear became a buddy of Osmah Exitsneaked to learn information in year 192.

A Bio That Raises Questions But Is Lost in Dull Repetition

Some kind of cool spy? Or a villain?

At a meta-fact level, there is no model or intelligence summarizing the stats to make a big-picture point, like "this guy is a spy."
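A standard data-to-text fix for the dull repetition is aggregation: group events that share a relation and report them once, with a count and a year range. A minimal sketch, assuming hypothetical `{year, relation, other, purpose}` event objects, applied to the five "became a buddy of ... to learn information" events above. It treats the relation as symmetric, ignoring that one of them was initiated by Stasost Paintterror. It also still doesn't infer the meta-fact ("this guy is a spy"); that would need a rule or a model on top.

```javascript
// Collapse events that share a relation phrase into one summary sentence.
function aggregate(name, events) {
  const groups = new Map();
  for (const e of events) {
    const key = `${e.relation}|${e.purpose}`;
    if (!groups.has(key)) groups.set(key, []);
    groups.get(key).push(e);
  }
  const sentences = [];
  for (const group of groups.values()) {
    const { relation, purpose } = group[0];
    const years = group.map((e) => e.year);
    const first = Math.min(...years);
    const last = Math.max(...years);
    const when = first === last ? `In year ${first}` : `Between years ${first} and ${last}`;
    const who = group.length === 1 ? group[0].other : `${group.length} people`;
    sentences.push(`${when}, ${name} ${relation} ${who} ${purpose}.`);
  }
  return sentences.join(" ");
}

const buddy = (year, other) => ({ year, relation: "became a buddy of", other, purpose: "to learn information" });
const events = [
  buddy(172, "Atu Malicepassionate"),
  buddy(179, "Omon Tightnesspleat"),
  buddy(186, "Stasost Paintterror"),
  buddy(191, "Meng Cruxrelic"),
  buddy(192, "Osmah Exitsneaked"),
];
console.log(aggregate("Zotho Tattoospear", events));
// Between years 172 and 192, Zotho Tattoospear became a buddy of 5 people to learn information.
```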

I should have done more story data mining...

UMAP layout of race/gender/age/social links: look at outliers, understand the groups (e.g., "childless male dwarves," "chewed up in battle")

I have both slides and a Medium post about this project, plus code in NaNoGenMo 2021.
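Before a UMAP layout like this, the mix of categorical and numeric attributes has to become numeric vectors. A minimal sketch of that preparation step, assuming hypothetical fields `race`, `gender`, `age`, and `links` (a count of social links). Categories are one-hot encoded and numbers are min-max scaled so that no single feature dominates the distances. The rows could then go to a UMAP implementation such as the umap-js package; that call is not shown here.

```javascript
function encode(records, categoricalKeys, numericKeys) {
  // Distinct values of each categorical field, in a stable (sorted) order.
  const vocab = {};
  for (const k of categoricalKeys) {
    vocab[k] = [...new Set(records.map((r) => r[k]))].sort();
  }
  // Min and max of each numeric field, for scaling into [0, 1].
  const range = {};
  for (const k of numericKeys) {
    const vals = records.map((r) => r[k]);
    range[k] = [Math.min(...vals), Math.max(...vals)];
  }
  return records.map((r) => {
    const row = [];
    for (const k of categoricalKeys) {
      for (const v of vocab[k]) row.push(r[k] === v ? 1 : 0); // one-hot
    }
    for (const k of numericKeys) {
      const [lo, hi] = range[k];
      row.push(hi === lo ? 0 : (r[k] - lo) / (hi - lo)); // min-max scale
    }
    return row;
  });
}

// Invented example records.
const people = [
  { race: "dwarf", gender: "male", age: 120, links: 0 },
  { race: "human", gender: "female", age: 40, links: 12 },
  { race: "dwarf", gender: "female", age: 80, links: 6 },
];
console.log(encode(people, ["race", "gender"], ["age", "links"]));
```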

A Data-Driven Story (Dwarf Fortress): Data + Structure → STORY

Structure could also have been applied to the little histories over time, to get a better narrative.

But also: how much is the goal data fidelity, and how much is it interest?

I was going for data fidelity, but for NaNoGenMo maybe that was not a good choice. In any case, I wasn't using any LLM text gen to "add untrue color," which would've made it much spicier and a better read.

Why were these not fun?

The projects were not weird enough, in general.

Too few glitches, too little interactivity.

In my personal projects, if I'm not having fun, something is going wrong... otherwise why do them, right?

Types of "fun"

sense-pleasure, make-believe, drama or narrative, challenge, social framework, discovery, self-discovery and expression, surrender


What can fun come from?

What makes something fun? Lots of things, but...

In my projects I'm mainly interested in the highlighted ones here

I didn't say this explicitly, but I am usually looking to see if a project makes me laugh. If I laugh at the result, it's because of a surprise that's delightful, and that makes it "fun," too. This might be my most important self-check metric.

Fun ~ Funny

Aside on other "game as story" projects

a digression on two NaNoGenMo game data writing projects I like, fyi

Both these projects are game playthroughs turned into narrative text, which I'm really into. Like a lot of NaNoGenMo output, they aren't necessarily fun to read in the end, though.

The snow never ceases.

I ski on autopilot, outside myself.

It feels like I've been skiing forever.

The landscape is blank. If I close my eyes I'll crash.

An infinity of ice. Before me is more of the same.

I've gone 132 meters now. And meters and meters to go.

Gravity controls me completely. I ski on autopilot, outside myself. These menacing, craggy boulders at every turn.

Half the trees are splintered dead. Just keep going... I have to push on. Yet they said this was the best skiing anywhere.

I'm going 9 meters per hour. I'm maneuvering around something, but I can barely see what.

Movement behind me; a noise. Whoosh, colder breeze, dusting up of snow. But when I turn, nothing. Just keep going...

Snow like static in the ears. The people passing by all look the same. No time to shiver.

I ask myself how I'm skiing. How does one even know?

A novel generated by playing SkiFree, by Katstasaph (NaNoGenMo 2021)

Mario stands up, brushes off his overalls, and gets ready for another go. Mario begins to slow down. Mario lands on solid ground. Mario lost his super mushroom power. Mario sees a Grey CheepCheep in the distance. Mario enters a new area. Mario finds himself underwater. Mario begins to slow down. Mario begins paddling. Mario sees a Grey CheepCheep in the distance. Mario treads water upwards. Mario treads water upwards. Mario treads water upwards. Mario sees a Grey CheepCheep in the distance. Mario treads water upwards. Mario sees a Blooper in the distance. Mario lands on solid ground. Mario treads water upwards. Mario begins to slow down. Mario lands on solid ground. Mario begins paddling. Mario treads water upwards. Mario collects a coin. Mario collects a coin.

Arguably a bit similar to my Dwarf2Text, though.

Bonus (sad) Content: Spindle

Alex Calderwood et al (ICCC 2022):

a tool to help write interactive fiction, using a GPT-3 model fine-tuned on interactive fiction games.

But they haven't found a way to share the fine-tuned model, so we have to redo the fine-tuning ourselves to use the code.

I was so disappointed I couldn't run it.

Story as game and toy

Making texts interactive

Perhaps a better question is whether stories can be fun in the way games can.

- Raph Koster, A Theory of Fun for Game Design

"Some Dim, Random Way"

Kevan Davis's NaNoGenMo of Moby Dick as a CYOA game (link)

I freaking love Kevan's CYOA Moby Dick. I wish I had thought of it. (Eee, the code seems to be PHP?) But: I will note that I think it works best as a kind of fan remix of a book you know well, as a new way to experience it.

It is definitely very, very funny.

That's the guy who turned Wikipedia into a text adventure game, btw

Raj's work, especially:

Bringing Stories Alive: Generating Interactive Fiction Worlds

(Ammanabrolu et al, 2020)

I tried to use this process and some of the code to turn fairy tales into games (the results are so poor I don't bother showing them here). It's really hard, or was then, to get good entities and relations from OpenIE on a fairy tale. I think other tools might be better for this now (e.g., Holmes).
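To make the extraction step concrete: OpenIE-style output is a list of (subject, relation, object) triples, and a game world needs locations with characters and objects placed in them. A much-simplified sketch, not the method of Ammanabrolu et al., that treats a hand-picked (hypothetical) set of relation strings as "ends up in this place" links and builds a map from each location to its contents. The noisy, inconsistent relations you get from real fairy-tale text are exactly where something this naive breaks down.

```javascript
// Relations we (hypothetically) treat as "subject ends up in object".
const LOCATIVE = new Set(["lived in", "is in", "was in", "went to"]);

function buildWorld(triples) {
  const world = new Map(); // location -> Set of things found there
  for (const [subj, rel, obj] of triples) {
    if (!LOCATIVE.has(rel.toLowerCase())) continue; // skip non-spatial facts
    if (!world.has(obj)) world.set(obj, new Set());
    world.get(obj).add(subj);
  }
  return world;
}

// Invented triples, standing in for OpenIE output.
const triples = [
  ["the witch", "lived in", "the gingerbread house"],
  ["Hansel", "went to", "the forest"],
  ["Gretel", "went to", "the forest"],
  ["the witch", "wanted", "Hansel"],
];
for (const [place, things] of buildWorld(triples)) {
  console.log(`${place}: ${[...things].join(", ")}`);
}
// the gingerbread house: the witch
// the forest: Hansel, Gretel
```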

re-Gendering Jane Austen

a WIP, from my NaNoGenMo 2021

Let people re-gender and rename the characters (all of them!), or take random mixes, and handle pronouns and coreference correctly.

This is a fan remix tool without a UI yet (it's raw Python). With David Bamman's code and pointers, I used a lightly modified version of his command-line tool to "fix" all the coreference and entity mentions in the book that the coref model got wrong (a painful process), allowing replacements wherever wanted. Sooooo many interesting NLP and semantics questions come up from this; I'll get it online and write about it when I get some fun-employment time.
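A minimal sketch of the replacement step, assuming coreference has already been fixed so that every mention carries a character ID and, for pronouns, a grammatical role. That role tag is an assumption worth flagging: "her" can become "him" or "his," and coreference alone doesn't say which. The mention format, the `cast` table, and the pronoun table are hypothetical, not the format of David Bamman's tool.

```javascript
// Pronoun forms by gender and grammatical role (a reduced, hypothetical set).
const PRONOUNS = {
  female: { subj: "she", obj: "her", poss: "her", reflexive: "herself" },
  male: { subj: "he", obj: "him", poss: "his", reflexive: "himself" },
};

// Capitalize the replacement if the original token was capitalized.
function matchCase(word, original) {
  return original[0] === original[0].toUpperCase() ? word[0].toUpperCase() + word.slice(1) : word;
}

// tokens: array of words; mentions: { start, end, charId, kind: "name" | "pronoun", role? }
// cast: charId -> { name, gender } for the remixed version
function remix(tokens, mentions, cast) {
  const out = tokens.slice();
  // Replace from the end so earlier token indices stay valid.
  const sorted = [...mentions].sort((a, b) => b.start - a.start);
  for (const m of sorted) {
    const c = cast[m.charId];
    const replacement = m.kind === "name" ? c.name : matchCase(PRONOUNS[c.gender][m.role], tokens[m.start]);
    out.splice(m.start, m.end - m.start, replacement);
  }
  return out.join(" "); // no detokenization: punctuation stays space-separated
}

const tokens = "Mr. Darcy danced only once , and he declined every introduction .".split(" ");
const mentions = [
  { start: 0, end: 2, charId: "darcy", kind: "name" },
  { start: 7, end: 8, charId: "darcy", kind: "pronoun", role: "subj" },
];
const cast = { darcy: { name: "Miss Darcy", gender: "female" } };
console.log(remix(tokens, mentions, cast));
// Miss Darcy danced only once , and she declined every introduction .
```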

It is a truth universally acknowledged, that a single woman in possession of a good fortune, must be in want of a husband.

this doesn't really suggest the enormity of the changes, funnily enough...

That opening sentence could belong to the world Austen was writing in.

What a contrast between her and her friend! Miss Darcy danced only once with Mrs. Hurst and once with Miss Bingley, declined being introduced to any other lady, and spent the rest of the evening in walking about the room, speaking occasionally to one of her own party. Her character was decided. She was the proudest, most disagreeable woman in the world, and everybody hoped that she would never come there again.

Amongst the most violent against her was Mr. Bennet, whose dislike of her general behaviour was sharpened into particular resentment by her having slighted one of his sons.

But it's a bisexual matriarchal universe...

In fact, I changed some of the binary genders but not all, so women dance with women. Mr. and Mrs. Bennet have swapped their roles. They have 5 sons. Anything is possible.

... meant to enhance our perception of the familiar human condition — the thing we’re so used to that we’ve become blind to it — by making the familiar strange.

Art is...

My tool doesn't make "art," but it makes the book fresh and changes how its world looks. It also supports a vision of gender and relationship flexibility that we might want to read, while retaining Austen's impeccable style.

Image Poems

models talking to models, a proto version

I am weirdly obsessed with both image2text and text2image. Especially for artistic purposes, obviously.

Source image; call the image API to get content keywords; search the poetry db for relevant lines; randomly place...

Diagram: Google Vision API → keywords ("house," "building," "structure"...) → Poetry db → lines → randomly combine and structure the lines

The poetry part here is a SQLite db of lines of poetry, searched by words from the image API. (It's not a text-gen LM, but it could be.)
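A minimal sketch of the lookup-and-juxtapose step. Two assumptions: an in-memory array stands in for the SQLite database, and a hard-coded keyword list stands in for the Google Vision API labels. The sketch keeps lines that contain any keyword as a whole word, shuffles them, and gives each a random position on the image. A seeded random generator makes runs repeatable.

```javascript
// Seeded PRNG (the common "mulberry32" recipe) so placements are repeatable.
function mulberry32(a) {
  return function () {
    a = (a + 0x6d2b79f5) | 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

function escapeRegExp(s) {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Keep lines mentioning any keyword, shuffle them, and place each at a random spot.
function imagePoem(keywords, poetryLines, maxLines, rand) {
  const pattern = new RegExp(`\\b(${keywords.map(escapeRegExp).join("|")})\\b`, "i");
  const hits = poetryLines.filter((line) => pattern.test(line));
  for (let i = hits.length - 1; i > 0; i--) { // Fisher-Yates shuffle
    const j = Math.floor(rand() * (i + 1));
    [hits[i], hits[j]] = [hits[j], hits[i]];
  }
  // x and y are fractions of the image's width and height.
  return hits.slice(0, maxLines).map((text) => ({ text, x: rand(), y: rand() }));
}

// Placeholder lines, invented for this example.
const lines = [
  "a house of paper in the rain",
  "the river forgets its name",
  "every building hums at night",
  "housework never ends",
];
console.log(imagePoem(["house", "building", "structure"], lines, 3, mulberry32(42)));
```

The whole-word match is why "housework" doesn't count as "house"; a real poetry db would also want stemming, or the reductionism gets too strict.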

Source images from Midjourney... a text2image diffusion model

"are we having fun yet?"

Surprisingly, yes... the combos of randomness and reductionism make it fun.

And is it funny? Often yes.

It's fast to run, and it's interesting to check the output: some results are super and others are not. But it's very entertaining to see the random output and the juxtapositions on the image background.

btw "Image2Poem"

a dead code research project: code from 4 years ago that I can't get to run now (GitHub; Best paper of ACM 2018)

(But there is a dataset there!)

Part of the tragedy of current creative work in AI - things go dead due to lib changes and interop bugs SO FAST. It would be faster for me to redo this from scratch than to try to update their code, IMO. And they have no incentive to update it, as researchers, I assume.

Word2Vec Poem Edits

a few years ago... pre-Covid...

visible in more detail in previous talk slides like PyData London

Making toys out of poems

This was a project where I used word2vec models over a local API to let you edit poems by clicking on words and replacing them with "similar" words in the embedding space. Of course, it took me longer to make the React front end than any other part of it.
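The core of the "click a word, get similar words" interaction is a nearest-neighbor lookup by cosine similarity. A minimal sketch with a made-up three-dimensional embedding table. A real word2vec model has many thousands of words and a few hundred dimensions, and this project served it over a local API rather than in the browser.

```javascript
function cosine(a, b) {
  let dot = 0, na = 0, nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  return dot / (Math.sqrt(na) * Math.sqrt(nb));
}

// Return the k words whose vectors point most nearly the same way as `word`'s.
function similarWords(word, vectors, k) {
  const target = vectors[word];
  if (!target) return []; // out-of-vocabulary click: nothing to offer
  return Object.keys(vectors)
    .filter((w) => w !== word)
    .map((w) => ({ word: w, score: cosine(target, vectors[w]) }))
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((x) => x.word);
}

// Toy vectors, invented for illustration only.
const vectors = {
  frog: [0.9, 0.1, 0.0],
  toad: [0.85, 0.15, 0.05],
  pond: [0.6, 0.7, 0.1],
  moon: [0.0, 0.2, 0.95],
};
console.log(similarWords("frog", vectors, 2)); // [ 'toad', 'pond' ]
```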

Original haiku by Bashō:

Another word2vec model

I worked on this for Kyle McDonald

Context is a sample of "good poetry" + previous few lines of generated text...

GPT-2 Telephone Game Poetry Generation

User inputs a word, e.g. "snow"

The model generates 2 lines containing it,

and updates the context prompt to contain those lines.

I need to credit Kyle with being clever about how to train the GPT-2 model to produce couplets that contain the given word in either line. It worked very well. This was all pre-GPT-3, and in any case we couldn't have served such a large model with the compute we had and the response times we needed.
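The context-update loop can be sketched with a placeholder `generateCouplet` function standing in for the fine-tuned GPT-2 call. That function, the `<word: ...>` marker, and the four-line window are all hypothetical; this is not Kyle's training or prompting scheme. The prompt is a fixed sample of "good poetry" plus the most recent generated lines, so each new couplet is conditioned on what came just before. That conditioning is the telephone effect.

```javascript
// Hypothetical stand-in for the fine-tuned GPT-2 model: a real version
// would send `prompt` to the model and return two generated lines.
function generateCouplet(prompt, word) {
  return [`a line that holds the ${word}`, "and one that answers it"];
}

function makeTelephonePoem(seedPoetry, words, windowSize = 4) {
  const poem = [];
  for (const word of words) {
    // Context = fixed poetry sample + the last few generated lines + the new word.
    const recent = poem.slice(-windowSize);
    const prompt = [seedPoetry, ...recent, `<word: ${word}>`].join("\n");
    const couplet = generateCouplet(prompt, word);
    // Keep the couplet only if the word appears in either line (substring check).
    if (couplet.some((line) => line.includes(word))) poem.push(...couplet);
  }
  return poem;
}

console.log(makeTelephonePoem("(sample of good poetry)", ["snow", "move"]).join("\n"));
```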

view me, my eyes, on this cold wall.

you will never move out of this picture

without a third leg and i promise you,

you'll always step out of this picture, your one-year detail.

we're talking about music but, you know, when you do, you might

find an item in the road that brings the whole picture

of truth, the last shade of the paint in a window's shade,

you'll know, but how can i tell you, how can i relate

the light that flickers in a mirror? the shadow of a hand.

Input words (blue on the slide): move, detail, item, relate, miss

The blue words were randomly chosen as inputs to test the model and its coherence. It's not too bad?

Is it fun?

I don't really know if it was in practice, although videos of people using it show them seeming quite engaged. The opportunity for input, and the contextual response to it, seems like a key element of the engagement here.

Is it funny?

Yes, definitely, it sometimes was. I really enjoyed reading all the test output. But it was sometimes toxic, too, so there was a filter list on input and output.
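A filter list like that can be as simple as a whole-word check applied both to the user's input word and to each generated line. A minimal sketch with placeholder entries rather than a real blocklist. Word lists miss misspellings and context, which is one reason toxicity filtering is hard; the tokenizer here also only handles plain English letters.

```javascript
function makeFilter(blocklist) {
  const blocked = new Set(blocklist.map((w) => w.toLowerCase()));
  return function isClean(text) {
    // Split on anything that isn't a letter, digit, or apostrophe.
    const words = text.toLowerCase().split(/[^a-z0-9']+/).filter(Boolean);
    return !words.some((w) => blocked.has(w));
  };
}

const isClean = makeFilter(["badword", "worseword"]); // placeholder entries

// Filter on input, then on output; null means "show nothing".
function safeGenerate(inputWord, generate) {
  if (!isClean(inputWord)) return null;
  const lines = generate(inputWord);
  return lines.every(isClean) ? lines : null;
}

console.log(safeGenerate("snow", (w) => [`the ${w} again`, "and again"]));
```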

Telephone Translation Toy

A toy project in progress using Google Translate API

A "telephone" game is one in which messages are passed on, and may be distorted on the way. I love these, especially with AI models involved. So a bunch of related projects now...

Poem constructed of 48 translations into English of Dante’s opening lines of The Inferno.

This "data" poem is a fav of mine. It has been inspirational in a bunch of ways.

the spirit is willing but the flesh is weak

the vodka is good but the steak is not so great

possibly apocryphal linguistics story about MT from undergrad days

In the talk I had the vodka and steak reversed, whoops. Anyway, it was Norbert Hornstein at UMD who told me about this translation issue when I was an undergrad. Aphorisms are hard :)

English: Hi ("hi") → French: Salut ("hi") → Korean: 안녕 → etc.

I constructed a little simulation in which balls collide and pass on their messages, translated into the language of the recipient. Black is English and starts the chain. My UI is too bad to show, but at the end it writes out a file of the collisions and translations, with everything also translated into English for inspection.
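The message-passing rule at the heart of this can be sketched without the physics. At each step two random agents "collide," and the receiver takes the sender's message, translated into its own language. The `translate` function is a hypothetical stub that just tags the text; a real version would call the Google Translate API, which (as the log excerpt below shows) sometimes fails for a language pair. Random pairing stands in for the ball collisions, and the `step|from|to|text` log shape is an assumption modeled on that excerpt.

```javascript
// Hypothetical stand-in for a machine translation call.
function translate(text, from, to) {
  return `[${from}->${to}] ${text}`;
}

function runTelephone(agentLangs, message, steps, rand = Math.random) {
  // Agent 0 (English) starts the chain; the others start with no message.
  const agents = agentLangs.map((lang, i) => ({ lang, message: i === 0 ? message : null }));
  const log = [];
  for (let step = 1; step <= steps; step++) {
    const a = Math.floor(rand() * agents.length);
    let b = Math.floor(rand() * (agents.length - 1));
    if (b >= a) b += 1; // make sure the two agents differ (needs >= 2 agents)
    const sender = agents[a];
    const receiver = agents[b];
    if (sender.message === null) continue; // nothing to pass on yet
    receiver.message = translate(sender.message, sender.lang, receiver.lang);
    log.push(`${step}|${sender.lang}|${receiver.lang}|${receiver.message}`);
  }
  return log;
}

console.log(runTelephone(["en", "fr", "ko", "ay"], "It was Miss Scarlett in the library with the pipe", 5).join("\n"));
```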

```javascript
// Language codes known to the Google Translate API when this was written.
// Hebrew is listed twice, under the legacy code "iw" and under "he".
const languages = {
  "af":"Afrikaans", "ak":"Akan", "sq":"Albanian", "am":"Amharic", "ar":"Arabic", "hy":"Armenian", "as":"Assamese", "ay":"Aymara", "az":"Azerbaijani", "eu":"Basque", "be":"Belarusian", "bn":"Bengali", "bs":"Bosnian", "bg":"Bulgarian", "ca":"Catalan", "ceb":"Cebuano", "ny":"Chichewa", "zh-CN":"Chinese (Simplified)", "zh-TW":"Chinese (Traditional)", "co":"Corsican", "hr":"Croatian", "cs":"Czech", "da":"Danish", "dv":"Divehi", "nl":"Dutch", "en":"English", "eo":"Esperanto", "et":"Estonian", "ee":"Ewe", "tl":"Filipino", "fi":"Finnish", "fr":"French", "fy":"Frisian", "gl":"Galician", "lg":"Ganda", "ka":"Georgian", "de":"German", "el":"Greek", "gn":"Guarani", "gu":"Gujarati", "ht":"Haitian Creole", "ha":"Hausa", "haw":"Hawaiian", "iw":"Hebrew", "hi":"Hindi", "hmn":"Hmong", "hu":"Hungarian", "is":"Icelandic", "ig":"Igbo", "id":"Indonesian", "ga":"Irish", "it":"Italian", "ja":"Japanese", "jw":"Javanese", "kn":"Kannada", "kk":"Kazakh", "km":"Khmer", "rw":"Kinyarwanda", "ko":"Korean", "kri":"Krio", "ku":"Kurdish (Kurmanji)", "ky":"Kyrgyz", "lo":"Lao", "la":"Latin", "lv":"Latvian", "ln":"Lingala", "lt":"Lithuanian", "lb":"Luxembourgish", "mk":"Macedonian", "mg":"Malagasy", "ms":"Malay", "ml":"Malayalam", "mt":"Maltese", "mi":"Maori", "mr":"Marathi", "mn":"Mongolian", "my":"Myanmar (Burmese)", "ne":"Nepali", "nso":"Northern Sotho", "no":"Norwegian", "or":"Odia (Oriya)", "om":"Oromo", "ps":"Pashto", "fa":"Persian", "pl":"Polish", "pt":"Portuguese", "pa":"Punjabi", "qu":"Quechua", "ro":"Romanian", "ru":"Russian", "sm":"Samoan", "sa":"Sanskrit", "gd":"Scots Gaelic", "sr":"Serbian", "st":"Sesotho", "sn":"Shona", "sd":"Sindhi", "si":"Sinhala", "sk":"Slovak", "sl":"Slovenian", "so":"Somali", "es":"Spanish", "su":"Sundanese", "sw":"Swahili", "sv":"Swedish", "tg":"Tajik", "ta":"Tamil", "tt":"Tatar", "te":"Telugu", "th":"Thai", "ti":"Tigrinya", "ts":"Tsonga", "tr":"Turkish", "tk":"Turkmen", "uk":"Ukrainian", "ur":"Urdu", "ug":"Uyghur", "uz":"Uzbek", "vi":"Vietnamese", "cy":"Welsh",
  "xh":"Xhosa", "yi":"Yiddish", "yo":"Yoruba", "zu":"Zulu", "he":"Hebrew"
};
```

in use, though:

203|ms|it|Era la signorina Scarlett in biblioteca con la pipa

204|or|ku|Ew Miss Scarlett di pirtûkxaneyê de bi boriyê bû

204|el|ay| We can't speak to each other.

206|or|el|Ήταν η δεσποινίς Σκάρλετ στη βιβλιοθήκη με το σωλήνα

207|mt|ms|Ia adalah Miss Scarlett di perpustakaan dengan sangkakala

Greek → Aymara (a language of the Bolivian Andes)

The list of languages known to the Google Translate API. But not all of them can be translated into each other, it seems.

- listen: there is a good universe beside her; let's go
- listen: there is a good universe nearby; go
- listen: there's a damn good universe; gone
- Listen: Next door is a very good corner. So let's go
- Listen: The side door is a very good angle. Let's go
- listen: there is a damn good universe; go
- Please try listening. There is a very good universe around. go
- Please try to listen. There is a very nice corner on the side. Okay, let's go
- Listen: The side door is a very good corner. Let's go
- Listen: there is a very good environment. Let's go
- Listen: The neighbor has a very good angle. So let's go

English-Kurdish English-Albanian Kurdish-Finnish Belarusian-German Kurdish-Finnish German-Albanian Japanese-Albanian Japanese-Kurdish Somali-Kurdish Haitian Cr.-Kurdish German-Finnish

ee cummings "listen: there's a hell of a good universe next door; let's go"

universe → corner, corner → angle, next door → side door, or neighbor, let's go → go...

- listen: there is a hell of a good scholar next door; let's go
- listen: there's a damn good scientist nearby; let's go
- Listen. There is a great scholar next door. let's go
- listen: next to hell there is a good scientist; let's go
- Try to hear. There is a wonderful researcher next door. let's go
- listen: next to hell there is a good scientist; let's go
- Listen: Next door is the hell of a talented scholar. Alright, let's go
- listen: she has a hell of a good scholar by her side; let's go
- Listen: Next to it is the hell of a talented scientist. Alright, let's go
- listen: he's got a damn good researcher by his side; let's go
- Listen. There is a brilliant scientist next door. let's go
- listen. There is a wonderful scientist next door. Alright, let's go
- listen: she has a hell of a good scholar by her side; let's go

Somehow it turns into visiting scientists...

Listen: Next door is a good cosmic hell. Alright, let's go

after 374 collisions, Haitian Creole to Japanese to English says,

"are we having fun yet?"

Yes!! Despite a shitty UI. Even just reading the transcript is entertaining.

Is it funny?

Frequently :)

WIP, but observations

- The "fun" is in both the random elements of the intersections and languages

- And in decoding how they happen(ed) - what went "wrong?" In which language pairs?

- Lots of potential for app toy designs, interaction, or games, here!

Too bad front-end coding is so hard these days :)

A less fun but related network effect: WoW Corrupted Blood Pandemic

Article in The Lancet by epidemiologists

and obviously some RL multi-agent gym environments

I plan to try some of the RL envs, and maybe code up a 2d agent-based game. In my copious spare time.

A Telephone Game Jam

in the "toy" that is Midjourney

making a social game out of a toy

Reminder: Midjourney is a text2image site where you give an AI model a text prompt, and it makes an image from it based on what it learned from its training data (which contains both text and images).

"Yesteryear's miming goons:: Oh vertigo awaits:: Bring ghastly tragic lore!::" @Sarah Northway

Yes, terriers mining guns::1 Over tea go, a wit::1 Brine gas tree::1 magic law::1 - @Ancient Chaos

Some of us play this a little every day. The colons in these prompts are commands to the model to chunk concepts, and sometimes add a weight.
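Midjourney's multi-prompt syntax splits a prompt at `::` into parts that are considered separately, and a number immediately after the `::` sets the relative weight of the part before it (1 if omitted). A rough sketch of how the two prompts above break apart. It approximates the documented syntax and is not Midjourney's actual parser; for example, it would misread a part that itself starts with a number.

```javascript
function parseMultiPrompt(prompt) {
  const parts = prompt.split("::");
  const chunks = [];
  let text = parts[0].trim();
  for (let i = 1; i < parts.length; i++) {
    // A number right after "::" is the weight of the chunk before it.
    const m = parts[i].match(/^\s*(-?\d+(?:\.\d+)?)/);
    const weight = m ? Number(m[1]) : 1;
    const rest = m ? parts[i].slice(m[0].length) : parts[i];
    if (text) chunks.push({ text, weight });
    text = rest.trim();
  }
  if (text) chunks.push({ text, weight: 1 }); // trailing chunk with no "::"
  return chunks;
}

console.log(parseMultiPrompt("Yes, terriers mining guns::1 Over tea go, a wit::1 Brine gas tree::1 magic law::1"));
console.log(parseMultiPrompt("Yesteryear's miming goons:: Oh vertigo awaits:: Bring ghastly tragic lore!::"));
```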

It is being given word salad, so what it comes up with is always a surprise. That is fun.

we're playing by different internal self-imposed rules :)

I'm always trying to make English phrases, less word salad... my friends didn't like me calling them chaotic evil for their punny salad, they claim they are chaotic neutral.

Discussions of word salad results: it's always interesting how the model will interpret these weird prompts.

Delight strikes when we recognize patterns but are surprised by them.

Raph Koster, A Theory of Fun for Game Design

I think almost all of these text2image models inspire delight now.

57.5K Likes.

This is a lot of delight. Also, Jenni is fun and funny and you should follow her.

Classifying, collating, and exercising power over the contents of a space is one of the fundamental lessons of all kinds of gameplay.

- Raph Koster, A Theory of Fun for Game Design

A black & white cat in heaven

art therapy, self-expression, discovery...

Some people use Midjourney (and probably other t2i tools) for therapy or self-expression during hard times.

I was interested to see how strong the "black and white" part of this prompt was on the output. Not all variations were actually b&w, but the cat is.

Most AI research isn't about watching people use the tools, try to build real things, and feel engaged and joyful with them...

- my heavy paraphrase of David Holz (Midjourney founder) voice chat office hours yesterday

Yes, there are workshops, and CHI and UIST, etc., but for "big tech" AI labs, research incentives differ: they're often not about end use but about tables of SOTA scores.

That's one reason I like hanging out on Midjourney so much: the social side is often very interesting in this context. We need more labs making huge playgrounds, not just creating expensive, unshareable APIs.

Telephone "Games" with Art Gen Tools

WIPs with models talking to models...

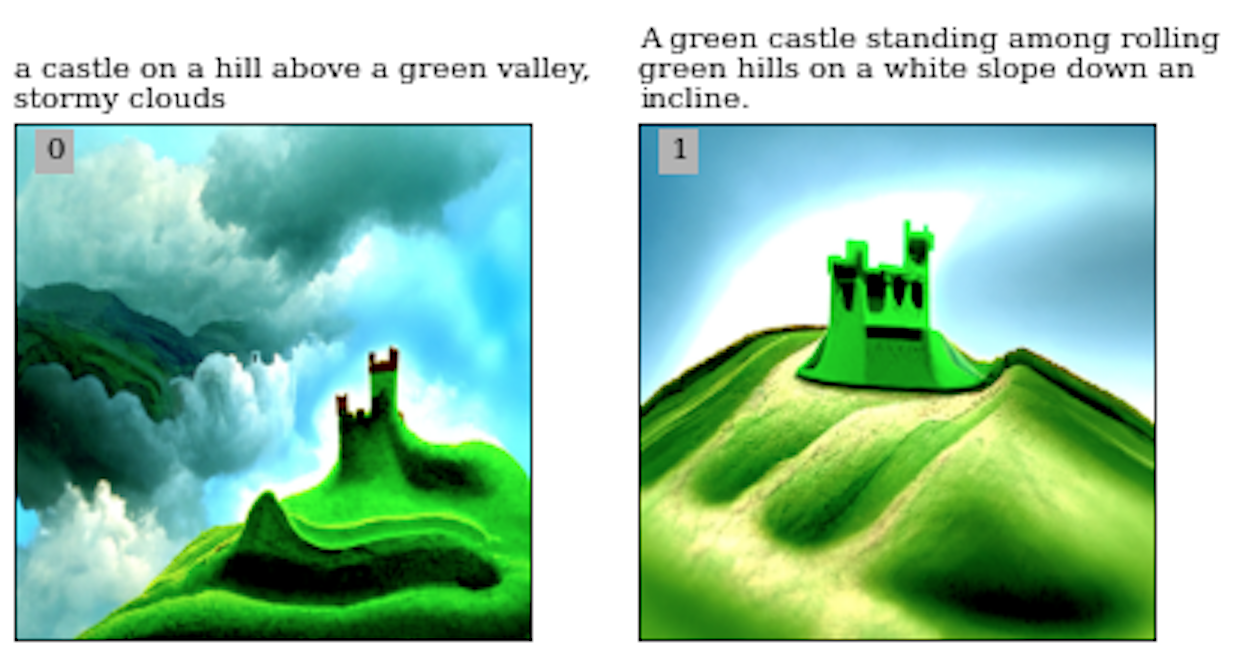

1. Starting prompt: "A lonely traveler walked by a lake"

2. Generate image with Disco Diffusion

3. Caption by an image2text app

(Goto 2)

(Constructed using JinaAI's wrapper for Disco Diffusion and Antarctic Captions by dzryk)

4. Write markdown from data.

This requires APIs or open-source code to construct, and it runs slowly because of Disco Diffusion's generation time.
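Steps 1 to 4 can be sketched with the two models as hypothetical async stubs: `generateImage` stands in for the Disco Diffusion wrapper and `captionImage` for Antarctic Captions, returning a ranked list of captions. Only the top caption feeds the next round, and the history is written out as a markdown table of prompts and images. The stubs, the file names, and the table layout are assumptions; a prompt containing `|` would need escaping.

```javascript
async function imageTelephone(startPrompt, rounds, generateImage, captionImage) {
  const history = [];
  let prompt = startPrompt;                           // step 1
  for (let i = 0; i < rounds; i++) {
    const imagePath = await generateImage(prompt, i); // step 2
    const captions = await captionImage(imagePath);   // step 3: ranked captions
    history.push({ i, prompt, imagePath, captions });
    prompt = captions[0];                             // top caption -> next prompt ("goto 2")
  }
  return history;
}

// Step 4: write markdown from the collected data.
function toMarkdown(history) {
  const rows = history.map((h) => `| ${h.i} | ${h.prompt} | ![image ${h.i}](${h.imagePath}) |`);
  return ["| # | Prompt | Image |", "|---|---|---|", ...rows].join("\n");
}

// Hypothetical stubs so the sketch runs without any models.
const fakeGenerate = async (prompt, i) => `image-${i}.png`;
const fakeCaption = async (path) => [`a caption of ${path}`, "a worse caption"];

imageTelephone("A lonely traveler walked by a lake", 3, fakeGenerate, fakeCaption)
  .then((h) => console.log(toMarkdown(h)));
```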

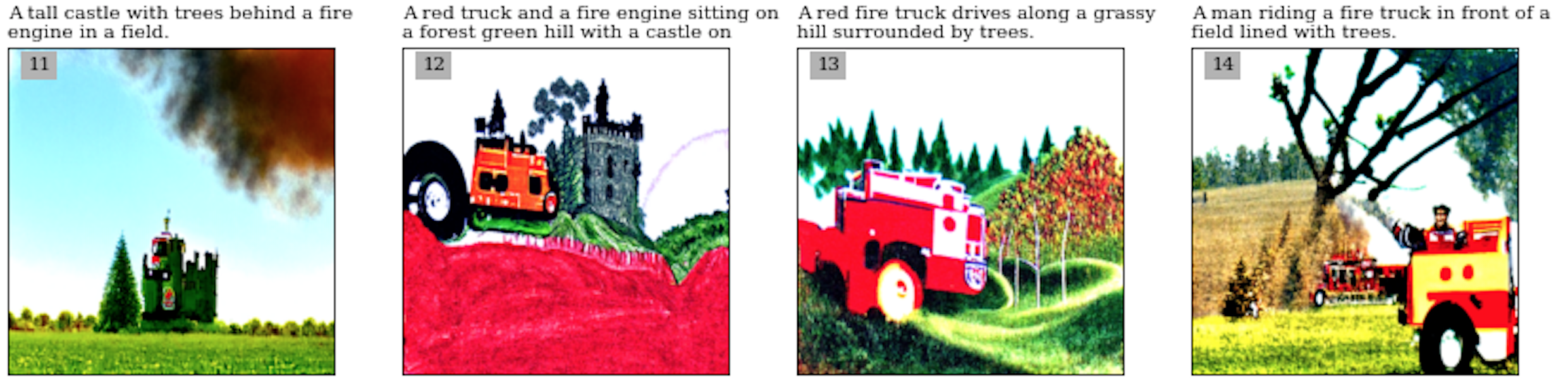

A grid view of the prompt used to generate each image, and the image. Each prompt is the top caption of the previous image. In a perfect AI model world, there would be no drift at all in contents/images.
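One crude way to put a number on that drift, if you only keep the prompt chain, is Jaccard similarity between the word sets of consecutive prompts: 1 means identical vocabulary, 0 means nothing shared. It ignores synonyms and word order, so treat it as a rough sketch rather than a semantic measure; comparing text or image embeddings would be finer-grained. The example uses the two Dalle-2 prompts from later in this talk.

```javascript
function wordSet(text) {
  return new Set(text.toLowerCase().match(/[a-z']+/g) || []);
}

function jaccard(a, b) {
  const A = wordSet(a);
  const B = wordSet(b);
  let shared = 0;
  for (const w of A) if (B.has(w)) shared++;
  const union = A.size + B.size - shared;
  return union === 0 ? 1 : shared / union;
}

// Similarity of each prompt to the one before it; lower values mean more drift.
function drift(prompts) {
  return prompts.slice(1).map((p, i) => jaccard(prompts[i], p));
}

console.log(drift([
  "A woman looks out the window of a fairy-tale castle",
  "A woman looking out from a window at a castle",
]));
```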

How it started

My input text is on image 0; its top caption is the prompt for image 1.

How it ends...

Actually, the fire: there was a data glitch...

"A castle sitting on a grassy field topped by trees next to a wooden fire hyd"

Antarctic Captions has some text glitches. I think Disco Diffusion didn't know what to make of "fire hyd" and just made a wood fire.

These telephone games remind me of looking at fog over the hills,

except much weirder, obviously

How it started...

Using GPT-3 with the captions and genre prompting... still not superb. WIP.

For this one, I added GPT-3 via its API to try to get new content through genre-related prompting. Meh?

"are we having fun?"

Uh, meh?

Is it funny?

funny weird, but not that funny ha-ha.

Observations

- Captioning has a heavy influence on output - and it's glitchy. In a fun way?

- Some image generation models are less good/interesting at reproducing the captions - so there's some "drift."

- Making this real-time, as an interactive toy, would improve it. That needs an API, plus a very fast captioning tool and generation engine (like Midjourney, not Disco Diffusion in a Colab).

Once OpenAI gives us an API for Dalle-2, the options for interactive fun open up a bit more.

Dalle-2 given: "A woman looks out the window of a fairy-tale castle"

Antarctic Captions say...

Without an API for Dalle-2, I tried some hand-cut-and-paste using the same captioning model.

Dalle-2 given the top caption: "A woman looking out from a window at a castle"

Antarctic Captions struggles... glitches... opportunity for drift...

say we pick #2...

Dalle-2 given caption 2: "A man looks out a window outside through the curtains of a door of a castle."

I stopped here to finish my slides.

"are we having fun yet?"

Actually, yes! Even hand-pasting stuff and fixing PyTorch version bugs.

and it's a good way to model what it would be like in a real interactive app, with a UI.

It was only funny in the errors, I guess, but the better images definitely helped the experience.

Note that overnight, Antarctic Captions' Colab stopped working because of an update in PyTorch Lightning, and I had to file an issue. These tools are SO FRAGILE.

Inpainting with Dalle-2, and the glitch.

I think inpainting and outpainting are a form of fan art edit remix.

I haven't had time to try it myself yet, I only just got access.

source link

The sublime...

...to the ridiculous?

You should watch the source link - the music is a tad pretentious, and the end view made me LOL.

little disfluencies that don't make total sense...

When you zoom in and look closely

I'm afraid to zoom in

Red Dead Redemption 2

"The Grannies" article

This is a lovely movie (not online) about explorers of the open space in RDR2, finding landscape bugs and exploring those. Sadly, it was all patched.

MS Flight Sim Bugs

A bunch of interesting things here, related to the RDR2 bugs: players can see how these glitches may have happened, and universally people try to do stuff like land on top of the skyscraper (or the bridge on the left, but that crashes the game). Fun and funny bugs!

Dwarf Fortress bug reports follow...

they are often funny, always fun, and regularly you wonder, "is this a bug, or genuine emergent behavior? should it be stopped or encouraged?" Not like a blood plague, but maybe a cat plague...

I've been illustrating some in MJ, with no style commands or anything. It's so fun. If there were an API for MJ, I'd make a bot to connect the two bots, but there isn't.

Same bug illustrated in Dalle-2 -- a very different model of a universe.

Evidently a businessman in the London Underground? Clearly a very different image model. I think Dalle-2 does much better given style direction. I might suck up the cost and do a DF bug illustration bot when there's an API, because it will be very weird.

models and data digression...

Speaking of different models: we should.

"Frankenstein's Telephone" by J. Rosenbaum

link, and see thread of recent attempts with non-binary persons imagined by Midjourney and Dalle-2... which follow:

The caption spawns an image, which is classified and divided into colored segments depending on what the neural network sees, another image is created using those abstract sections as a guide and a final caption is generated.

visualizing transgender and non-binary people

A previous telephone game, with several models. I hadn't seen this till recently.

AttnGAN (2017), Midjourney (now), Dalle-2 (now)

thread link

Aesthetic/Artistic/Affective Models and Datasets

We need lots of models, for lots of purposes, and especially artistic ones, for expressing less literal concepts. Here are a few stabs in this direction...

People have biases, and so do models and datasets, even more so datasets built on people's use of models.

For example one user really likes using the model to draw a 'world tree', or the earth represented as a giant tree.

Telephone game

We are in a telephone game of labeling, too: seeing and rating only the outputs of models introduces its own bias, of course (just something to be aware of).

Georges Perec, An Attempt at Exhausting a Place in Paris

A clever commentary on the book

“Obvious limits to such an undertaking: even when my only goal is just to observe, I don’t see what takes place a few meters from me: I don’t notice, for example, that cars are parking.”

Also: why are two nuns more interesting than two other passersby? A little girl, flanked by her parents (or by her kidnappers) is weeping.

A literary digression on labeling from a human perspective: Perec tries to note down what he sees over 3 days in Place Saint-Sulpice. Then he notices what he notices, and wonders at interpretation.

KaliYuga's Pixel Art Diffusion

I'd like to see more custom models, datasets, and annotation in both text and image creativity tool spaces.

KaliYuga also wrote a how-to on training your own diffusion model based on making this pixel art diffusion model. So wonderful.

Art is made possible only by the richness of the fantasy world we share.

- Chris Crawford, The Art of Computer Game Design

or, of the model we share? or the models we create that we share?

Thank you to...

The people who publish Colabs for cool applications of their research or their art tools (not just stats)

The academics who help out an independent "artist"/tinkerer (eg, David Bamman)

The companies like Midjourney who are making things for people to use for fun and art, and friends there

The artists and tool builders who make open source, share data sets, publish how-to's

And finally, thanks!

if you liked this, you might really like my newsletter:

(once a month, creative AI/games/story links)

me on Twitter: @arnicas - maybe more data sciencey?

And Medium articles.

arnicas@gmail.com

Slide with questions I got follows.

Questions I got after the talk:

- "Do you know Allison Parrish?" Yes, yes I do. A huge influence on my thinking.

- "Have you found any evidence of a secret language in Midjourney?" No, despite the weird telephone jam we play. That's the only secret language, apart from general #promptcraft.

- "What do you think of AI Dungeon?" I think it's fun for a little while, but mostly I am not a fan of raw GPT-3 text output. It loses the plot, wanders, and is often bad writing. I do enjoy Sudowrite, though, which I'm paying for so I can play with it a bit more... their UX is "fun."
