Product Reviews with NLP: Analysis and ElasticSearch

Lynn Cherny, Ph.D.

Code: https://github.com/arnicas/nlp_elasticsearch_reviews

The (Ambitious) Plan

- Analyzing Yelp Product Reviews with Python

- Indexing and Searching in ElasticSearch

- Front-end in Javascript

All examples assume localhost:9200 for ES.

Code: https://github.com/arnicas/nlp_elasticsearch_reviews

A tutorial in 25 minutes ("highlights")

Analysis with Python

Mostly using Pandas, but some NLTK and gensim

Code: https://github.com/arnicas/nlp_elasticsearch_reviews

Jupyter Notebook with Pandas

Businesses

Tendency for higher ratings

in this dataset.

Review Trends Over Time

You could show this to users in a UI, of course...

Do Some Simple NLP

But you can keep in mind that ES does this for you-- we won't use the tokenization we do here in ES directly.

Most common words

Very positive, unsurprising given star ratings.

Length Turns Up Some Surprises...

Wat?

many more lines....

many more lines....

(lol people are awesome)

Dictionary-based Sentiment

TSNE of top 1500 words

For search results, I made fake names for each business.

I also filtered out some reviews.

The exploration phase gives you some principled places to filter, if you want.

E.g.,

by length, by user id, by topic....

Filtering out some of the reviews -- very short or very long.

And very long ones.

Save dataframes for ES indexing:

one for the review text, one with summary stats for each business.

Note: msgpack is a nice fast format available in pandas for saving these. Save all intermediate results too, because some of the operations take a long time on these df's.

Note: I Threw in Some Topic Modeling too, but didn't use it in the ES side. (You could use it to filter further.)

See my code notebooks for this!

Indexing in Elasticsearch

(and searching)

Code: https://github.com/arnicas/nlp_elasticsearch_reviews

I'm using ES 2.3...

- You can download it (http://www.elastic.co/downloads) and just crack open the dir.

- The config directory is where you can put stopwords files and edit the yaml file for settings.

- Run it from the bin folder: ./bin/elasticsearch.

- Check your local install at localhost:9200 (and 5601 for kibana)

- For more, see the install page.

Get yourself the Sense plugin (installs to Kibana as an app, so install that too.)

https://www.elastic.co/guide/en/sense/current/installing.html

Install the python lib elasticsearch (pip)

https://elasticsearch-py.readthedocs.io/en/master/#

Text "Analysis" in ES

- An index is a collection of related documents.

- Analysis is a process of character filtering, tokenizing (breaking up the text into "units" like words"), and token filters (such as removing stopwords).

- By default, fields are analyzed with the "standard" analyzer. If you store non-text fields, you want to mark it as not_analyzed, when you index it.

https://www.elastic.co/guide/en/elasticsearch/reference/current/analysis.html

Standard Analyzer

vs. the "English" Analyzer

standard: my kitty cat's a pain in the neck

english: my kitti cat pain neck

Custom Analyzers

Create the settings...

Then "use" them.

A gotcha: It will use standard analyzer if it doesn't use yours -- always double check.

In Python, create your index with the mapping, and check it:

Test it!

html stripped, & converted, "a" and "the" are stripped, lowercase applied...

Yelp Data

Load your dataframe with pd.read_msgpack("yelp_df_forES.msg") and define a possible mapping.

Ideally, bulk data load will work; structure like this.

It errors for me, so I do it one-by-one (get a coffee):

Very simple text queries! With "explain" to check why.

localEs.search(index='yelp', doc_type='review', q='pizza-cookie')

Read how it works with tf-idf: https://www.elastic.co/guide/en/elasticsearch/guide/current/relevance-intro.html

Scoring is based on TF-IDF using analyzed text.

TF (term frequency): frequency of a term in a document (relative to length, or number of tokens in the doc)

IDF (inverse document frequency): log of the number of docs containing a term divided by the number of docs.

TF-IDF measures importance of a term in a document, relative to a set of documents.

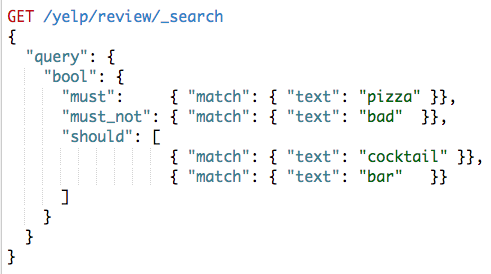

Lots of query types: e.g., Boolean Queries

"More Like This"

https://www.elastic.co/guide/en/elasticsearch/reference/2.3/query-dsl-mlt-query.html

Aggregate Queries

also "and" instead of default "or" to match all terms.

Aggregates will count results for you.

Aggregates plus sort by computed stars, as secondary sort.

Result...

"Buckets" with

key and count.

Nice trick: Query for matches against both original and analyzed text

Docs on tricks for more accurate results: https://www.elastic.co/guide/en/elasticsearch/guide/master/most-fields.html

You can also boost one of them to make it weigh more in the score, e.g.,

"fields": ["text^5", "text_orig"]

For the app, Index the business id's too (none analyzed)

Query for an exact match on business id:

Query for other attributes of businesses (non-textual)

Boolean, with must/should/must_not,

and ranges: "gte", "lte" on star ratings

Javascript Front-End

Code: https://github.com/arnicas/nlp_elasticsearch_reviews

The JS package for ES

https://www.elastic.co/guide/en/elasticsearch/client/javascript-api/current/quick-start.html

What Should We Return?

This is your design decision... You have access to ratings over time, sentiment of ratings, terms that match, places that have those ratings....

Let's say we do "places that have those terms, sorted by mean stars."

Get Biz Matches First

I'm matching all words in the query in this example. Do it as you like...

Aggregate counts of matching

businesses + their stars.

- Search all businesses for best matches, aggregate them.

- For each business, search for their stats in the business doc type.

- Create a link with the biz-id for a search for reviews.

Fix the info design, of course :)

Use the biz id stored in the link text to allow a new search limited to the business id,

to get matches with the search text (we sort by date)

Searching for matches in the single business:

Possible UX improvements

- Filter by star ratings, score, recency of ratings, sentiment...

- Allow looser search ("or" not "and"), or

- Consider doing "and" but falling back to an "or"

- Show scores in results (in some normalized nice way)

- Try search against multiple fields (text and text_orig), or use multiple stemming/stopwords indexing and take best score

- ... etc: Welcome to the wonderful world of query hacking.

Some tips for these kinds of apps

- Explore your data first (and maybe filter it before indexing)

- Come up with some example queries that are hard and realistic

- Start by indexing a subset and play with your mappings for the analysis

- Test-develop your queries in SENSE, then Python

- When you're ready, index the bulk of your data

- Try it in a toy app like mine, to refine your thinking on indexing, queries, filters, reporting....

Thanks!

lynn@ghostweather.com

@arnicas

Code: https://github.com/arnicas/nlp_elasticsearch_reviews